Which Web Application Attack Is More Likely To Extract Privacy Data Elements Out Of A Database?

Change is the new norm for the global healthcare sector. In fact, the digitization of health and patient information is undergoing a dramatic and fundamental shift in clinical, operating and business models and, more generally, in the economy for the foreseeable future. This shift is being spurred by aging populations and lifestyle changes; the proliferation of software applications and mobile devices; innovative treatments; heightened focus on care quality and value; and evidence-based medicine as opposed to subjective clinical decisions. All of these offer significant opportunities for supporting clinical decisions, improving healthcare delivery, management and policy making, surveilling disease, monitoring adverse events, and optimizing treatment for diseases affecting multiple organ systems [1, 2].

As noted above, big data analytics in healthcare carries many benefits and promises and presents great potential for transforming healthcare, yet it raises manifold barriers and challenges. Indeed, concerns over big healthcare data security and privacy are increasing year by year. Additionally, healthcare organizations have found that a reactive, bottom-up, technology-centric approach to determining security and privacy requirements is not adequate to protect the organization and its patients [3].

Motivated by this, new information systems and approaches are needed to prevent breaches of sensitive information and other types of security incidents, so as to make constructive use of big healthcare data.

In this paper, we discuss some interesting related works and present risks to big health data security, as well as some newer technologies to address these risks. Then, we focus on the big data privacy issue in healthcare, mentioning various laws and regulations established by different regulatory bodies and pointing out some feasible techniques used to ensure patient privacy. Thereafter, we present some techniques and approaches reported in the literature to deal with security and privacy risks in healthcare, while identifying their limitations. Lastly, we offer conclusions and highlight future directions.

Successful related works

Seamless integration of greatly diverse big healthcare data technologies can not only enable us to gain deeper insights into clinical and organizational processes but also facilitate faster and safer throughput of patients, create greater efficiencies, and help improve patient flow, safety, quality of care and the overall patient experience, no matter how costly it is.

Such was the case with South Tyneside NHS Foundation Trust, a provider of acute and community health services in northeast England that understands the importance of providing high-quality, safe and compassionate care to patients at all times, but needed a better understanding of how its hospitals operate in order to improve resource allocation and wait times and to ensure that any issues are identified early and acted upon [4].

Another example is UNC Health Care (UNCHC), a non-profit integrated healthcare system in North Carolina that has implemented a new system allowing clinicians to rapidly access and analyze unstructured patient data using natural-language processing. In fact, UNCHC has accessed and analyzed huge quantities of unstructured content contained in patient medical records to extract insights and predictors of readmission risk for timely intervention, providing safer care for high-risk patients and reducing readmissions [5].

Moreover, in the United States, the Indiana Health Information Exchange, a non-profit organization, provides a secure and robust technology network of health information linking more than 90 hospitals, community health clinics, rehabilitation centers and other healthcare providers in Indiana. It allows medical information to follow the patient, whether it is hosted in a single doctor's office or in a hospital system [6].

One more example is the Kaiser Permanente medical network based in California. It has more than 9 million members and is estimated to manage large volumes of data ranging from 26.5 petabytes to 44 petabytes [7].

Big data analytics is also used in Canada, e.g. at a children's hospital in Toronto, which succeeded in improving outcomes for newborns prone to serious hospital infections. Another example is the Artemis project, a newborn monitoring platform designed through a collaboration between IBM and the University of Ontario Institute of Technology. It supported the acquisition and storage of patients' physiological data and clinical information system data for online and real-time analysis, retrospective analysis, and data mining [8].

In Europe, specifically in Italy, the Italian Medicines Agency collects and analyzes a large amount of clinical data concerning expensive new medicines as part of a national profitability program. Based on the results, it may reassess medicine prices and market access terms [9].

In the domain of mHealth, the World Health Organization has launched the project "Be Healthy Be Mobile" in Senegal, and under the mDiabetes initiative it supports countries in setting up large-scale projects that use mobile technology, in particular text messaging and apps, to control, prevent and manage non-communicable diseases such as diabetes, cancer and heart disease [10]. mDiabetes is the first initiative to take advantage of widespread mobile technology to reach millions of Senegalese people with health information and expand access to expertise and care. Launched in 2013, with Costa Rica officially selected as the first country, the initiative is working on an mCessation program for tobacco, aimed at preventing smoking and helping smokers quit, and an mCervical cancer program in Zambia, and it plans to roll out mHypertension and mWellness programs in other countries.

After Europe, Canada, Australia, Russia, and Latin America, Sophia Genetics [11], a global leader in data-driven medicine, announced at the 2017 Annual Meeting of the American College of Medical Genetics and Genomics (ACMG) that its artificial intelligence had been adopted by African hospitals to advance patient care across the continent.

In Morocco, for instance, PharmaProcess in Casablanca, ImmCell, the Al Azhar Oncology Center and the Riad Biology Center in Rabat are some of the medical institutions at the forefront of innovation that have started integrating SOPHIA to speed up the analysis of genomic data, identify disease-causing mutations in patients' genomic profiles, and decide on the most effective care. As new users of SOPHIA, they become part of a larger network of 260 hospitals in 46 countries that share clinical insights across patient cases and patient populations, which feeds a knowledge base of biomedical findings to accelerate diagnostics and care [12].

While automation has led to better patient care workflows and reduced costs, the growing volume of healthcare data also increases the probability of security and privacy breaches. In 2016, CynergisTek released Redspin's 7th annual breach report, Protected Health Information (PHI) [13], which reported that hacking attacks on healthcare providers increased by 320% in 2016 and that 81% of records breached in 2016 resulted specifically from hacking attacks. Additionally, ransomware, a type of malware that encrypts data and holds it hostage until a ransom demand is met, was identified as the most prominent threat to hospitals. Additional findings of this report include:

-

325 large breaches of PHI, compromising 16,612,985 individual patient records.

-

3,620,000 breached patient records in the year's single largest incident.

-

40% of large breach incidents involved unauthorized access/disclosure.

These findings point to a pressing need for providers to take a much more proactive and comprehensive approach to protecting their information assets and combating the growing threat that cyber attacks present to healthcare.

Several successful initiatives have appeared to help the healthcare industry continually improve its ability to protect patient information.

In January 2014, for example, the White House undertook a 90-day review of big data and privacy, led by President Obama's Counselor John Podesta. The review produced concrete recommendations to maximize the benefits and minimize the risks of big data [14, 15], namely:

-

Policy attention should focus more on the actual uses of big data and less on its collection and analysis. Policies focused on collection and analysis are unlikely to yield effective strategies for improving privacy, or to be scalable over time.

-

Policies concerning privacy protection should address the purpose rather than prescribe the mechanism.

-

Research is needed in the technologies that help to protect privacy, in the social mechanisms that influence privacy-preserving behavior, and in legal options that are robust to changes in technology and create an appropriate balance among economic opportunity, national priorities, and privacy protection.

-

Education and training opportunities concerning privacy protection should be increased, including career paths for professionals. Programs that provide education leading to privacy expertise are essential and need encouragement.

-

Privacy protections should be extended to non-US citizens, as privacy is a worldwide value that should be reflected in how the federal government handles personally identifiable information from non-US citizens [16].

The OECD Health Care Quality Indicators (HCQI) project is responsible for a 2013/2014 program to develop tools that help countries balance data privacy risks against the risks of not developing and using health data. This program includes developing a risk categorization of different types and uses of data, and the promising practices that countries can deploy to reduce risks that directly affect everyone's daily life while enabling data use [17].

Privacy and security concerns in big data

Security and privacy in big data are important issues. Privacy is often defined as the ability to protect sensitive, personally identifiable health care information. It focuses on the use and governance of individuals' personal data, such as making policies and establishing authorization requirements to ensure that patients' personal information is collected, shared and used in the right ways. Security, by contrast, is typically defined as protection against unauthorized access, with some definitions explicitly including integrity and availability. It focuses on protecting data from malicious attacks and from theft of data for profit. Although security is vital for protecting data, it is insufficient for addressing privacy. Table 1 focuses on additional differences between security and privacy.

Security of big healthcare data

While healthcare organizations store, maintain and transmit huge amounts of data to support the delivery of efficient and proper care, the downsides are the lack of technical support and minimal security. Complicating matters, the healthcare industry continues to be one of the most susceptible to publicly disclosed data breaches. In fact, attackers can use data mining methods and procedures to discover sensitive data and release it to the public, resulting in a data breach. While implementing security measures remains a complex process, the stakes are continually raised as the ways to defeat security controls become more sophisticated.

Accordingly, it is critical that organizations implement healthcare data security solutions that protect important assets while also satisfying healthcare compliance mandates.

A. Big data security lifecycle

From a security and privacy perspective, Kim et al. [18] argue that security in big data refers to three matters: data security, access control, and information security. In this regard, healthcare organizations must implement security measures and approaches to protect their big data, associated hardware and software, and both clinical and administrative information from internal and external risks. At a project's inception, the data lifecycle must be established to ensure that appropriate decisions are made about retention, cost effectiveness, reuse and auditing of historical or new data [19].

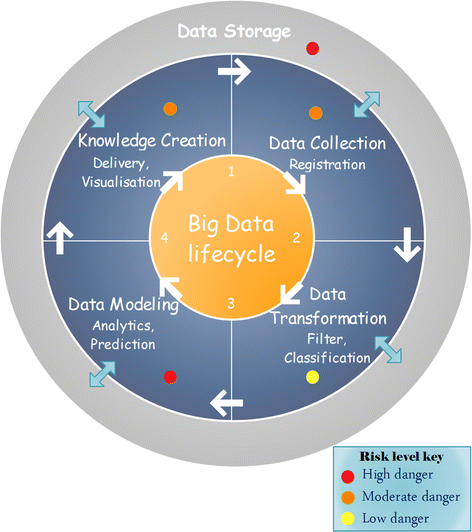

Yazan et al. [20] suggested a big data security lifecycle model extended from Xu et al. [21]. This model is designed to address the phases of the big data lifecycle and to correlate the threats and attacks facing a big data environment with these phases, whereas [21] addresses the big data lifecycle from a user-role perspective: data provider, data collector, data miner, and decision maker. The model proposed in [20] comprises four interconnected phases: data collection, data storage, data processing and analysis, and knowledge creation.

Furthermore, the CCW (Chronic Conditions Data Warehouse) follows a formal information security lifecycle model, which consists of four core phases that serve to identify, assess, protect against and monitor patient data security threats. This lifecycle model is continually being improved, with emphasis on constant attention and continual monitoring [21].

In this paper, we propose a model that combines the phases presented in [20] and the phases mentioned in [21], in order to provide comprehensive policies and mechanisms that address threats and attacks at each step of the big data lifecycle. Figure 1 presents the main elements of the big data lifecycle in healthcare.

Big data security lifecycle in healthcare

-

Data collection phase: This is the obvious first step. It involves collecting data from different sources in various formats. From a security perspective, securing big health data is a necessary requirement from the first phase of the lifecycle. Therefore, it is important to gather data from trusted sources, preserve patient privacy (there must be no attempt to identify individual patients in the database) and make sure that this phase is secured and protected. Indeed, mature security measures must be used to ensure that all data and information systems are protected from unauthorized access, disclosure, modification, duplication, diversion, destruction, loss, misuse or theft.

-

Data transformation phase: Once the data is available, the first step is to filter and classify it based on its structure and to apply any transformations needed for meaningful analysis. More broadly, data filtering, enrichment and transformation are needed to improve the quality of the data ahead of the analytics or modeling phase and to remove or appropriately deal with noise, outliers, missing values, duplicate data instances, etc. On the other hand, the collected data may contain sensitive information, which makes it extremely important to take sufficient precautions during data transformation and storage. To guarantee the safety of the collected data, the data should remain isolated and protected by maintaining access-level security and access control (using an extensive list of directories and databases as a central repository for user credentials, application logon templates, password policies and client settings) [22], and by defining security measures such as data anonymization, permutation, and data partitioning.

-

Data modeling phase: Once the data has been collected, transformed and stored in secured storage solutions, data processing and analysis are performed to generate useful knowledge. In this phase, supervised data mining techniques such as classification, and unsupervised ones such as clustering and association rule mining, can be employed for feature selection and predictive modeling. Further, several ensemble learning techniques exist that improve the accuracy and robustness of the final model. On the other hand, it is crucial to provide a secure processing environment. In fact, the focus of data miners in this phase is to use powerful data mining algorithms that can extract sensitive data. Therefore, the data mining process, and the network components in general, must be configured and protected against data-mining-based attacks and any security breach that may happen, and only authorized staff should work in this phase. This process helps eliminate some vulnerabilities and mitigates others to a lower risk level.

-

Knowledge creation phase: Finally, the modeling phase produces new information and valuable knowledge to be used by decision makers. This created knowledge is considered sensitive information, especially in a competitive environment. Indeed, healthcare organizations are aware that their sensitive information (e.g. patients' personal data) must not be publicly released. Accordingly, security compliance and verification are a primary objective in this phase.

At all stages of the big data lifecycle, data storage, data integrity and data access control are required.
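The precautions of the collection and transformation phases (cleaning the data, then removing direct identifiers before storage) can be sketched in a few lines. This is a minimal illustration under stated assumptions: the field names, the hard-coded key and the use of a keyed SHA-256 hash as a pseudonym are hypothetical choices for the example, not part of the lifecycle model described above; a real deployment would fetch the key from a key-management system.

```python
import hashlib
import hmac

# Hypothetical secret held only by the data custodian. In practice it would come
# from a key-management service and never be hard-coded.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"


def pseudonymize(patient_id: str) -> str:
    """Replace a direct identifier with a keyed, non-reversible token."""
    digest = hmac.new(PSEUDONYM_KEY, patient_id.encode(), hashlib.sha256)
    return digest.hexdigest()[:16]


def transform(records: list[dict]) -> list[dict]:
    """Drop incomplete rows, remove duplicates, and pseudonymize the
    patient identifier before the data reaches storage."""
    seen = set()
    cleaned = []
    for rec in records:
        if any(value is None for value in rec.values()):
            continue  # simplistic handling of missing values
        fingerprint = tuple(sorted(rec.items()))
        if fingerprint in seen:
            continue  # duplicate data instance
        seen.add(fingerprint)
        cleaned.append(dict(rec, patient_id=pseudonymize(rec["patient_id"])))
    return cleaned


raw = [
    {"patient_id": "P001", "age": 54, "diagnosis": "diabetes"},
    {"patient_id": "P001", "age": 54, "diagnosis": "diabetes"},  # duplicate
    {"patient_id": "P002", "age": None, "diagnosis": "asthma"},  # missing value
]
stored = transform(raw)
print(len(stored))  # 1
```

Because the same identifier always maps to the same token, records of one patient can still be linked for analysis, while the raw identifier never reaches the storage phase.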

B. Technologies in use

Various technologies are in use to ensure the security and privacy of big healthcare data. The most widely used technologies are:

1) Authentication: Authentication is the act of establishing or confirming that claims made by or about the subject are true and authentic. It serves vital functions within any organization: securing access to corporate networks, protecting the identities of users, and ensuring that the user is really who he or she claims to be.

Data authentication can pose special problems, especially man-in-the-middle (MITM) attacks. Most cryptographic protocols include some form of endpoint authentication specifically to prevent MITM attacks. For instance [23], transport layer security (TLS) and its predecessor, secure sockets layer (SSL), are cryptographic protocols that provide security for communications over networks such as the Internet. Running on top of the transport layer, TLS and SSL encrypt the data carried over network connections end-to-end. Several versions of these protocols are in widespread use in applications like web browsing, electronic mail, Internet faxing, instant messaging and voice-over-IP (VoIP). One can use SSL or TLS to authenticate the server using a mutually trusted certification authority. Hashing techniques like SHA-256 [24] and the Kerberos mechanism, based on the Ticket Granting Ticket or Service Ticket, can also be implemented to achieve authentication. Additionally, the Bull Eye algorithm can be used to monitor all sensitive data in 360°. This algorithm has been used to ensure data security and to manage relations between original data and replicated data. It also allows only authorized persons to read or write critical data. Paper [25] proposes a novel and simple authentication model using the one-time pad algorithm, which removes the need to communicate passwords between servers. In a healthcare system, both the healthcare data offered by providers and the identities of consumers should be verified at every access.
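A plain SHA-256 digest only detects accidental changes, since anyone can recompute it; to authenticate data, the hash is usually keyed. The sketch below swaps in HMAC-SHA256 from Python's standard library, assuming the provider and the consumer already share a secret key (for example, one exchanged over a TLS-protected channel). It is an illustration of keyed hashing, not the Kerberos, Bull Eye or one-time-pad mechanisms cited above.

```python
import hashlib
import hmac
import secrets

# Assumption: provider and consumer share this key, established out of band.
shared_key = secrets.token_bytes(32)


def sign(message: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(message: bytes, tag: str, key: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign(message, key), tag)


record = b'{"patient": "P001", "hba1c": 7.2}'
tag = sign(record, shared_key)
print(verify(record, tag, shared_key))                          # True
print(verify(record.replace(b"7.2", b"5.2"), tag, shared_key))  # False: tampered
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not leak how many leading characters of a forged tag were correct.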

2) Encryption: Data encryption is an efficient means of preventing unauthorized access to sensitive data. Encryption solutions protect and maintain ownership of data throughout its lifecycle, from the data center to the endpoint (including mobile devices used by physicians, clinicians, and administrators) and into the cloud. Encryption helps avoid exposure to breaches such as packet sniffing and theft of storage devices.

Healthcare organizations or providers must ensure that the encryption scheme is efficient, easy to use by both patients and healthcare professionals, and easily extensible to include new electronic health records. Furthermore, the number of keys held by each party should be minimized.

Although various encryption algorithms have been developed and deployed relatively well (RSA, Rijndael, AES and RC6 [24, 26, 27], DES, 3DES, RC4 [28], IDEA, Blowfish …), choosing suitable encryption algorithms to enforce secure storage remains a difficult problem.
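To make the encrypt/decrypt round trip concrete without third-party libraries, the sketch below uses a one-time pad (the primitive behind the authentication model of [25]) rather than AES, which is not in Python's standard library. It is a teaching illustration only: the key must be truly random, as long as the data, and never reused, which is exactly the key-management burden the requirement to minimize the number of keys per party argues against, and why block ciphers such as AES are used in practice.

```python
import secrets


def otp_encrypt(plaintext: bytes) -> tuple[bytes, bytes]:
    """One-time pad: XOR the data with a fresh random key of equal length."""
    key = secrets.token_bytes(len(plaintext))
    ciphertext = bytes(p ^ k for p, k in zip(plaintext, key))
    return ciphertext, key


def otp_decrypt(ciphertext: bytes, key: bytes) -> bytes:
    """XOR is its own inverse, so decryption applies the same operation."""
    if len(key) != len(ciphertext):
        raise ValueError("key must be exactly as long as the ciphertext")
    return bytes(c ^ k for c, k in zip(ciphertext, key))


ehr_field = b"Diagnosis: type 2 diabetes"  # hypothetical EHR field
ct, pad = otp_encrypt(ehr_field)
print(otp_decrypt(ct, pad) == ehr_field)  # True
```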

3) Data masking: Masking replaces sensitive data elements with an unidentifiable value. It is not truly an encryption technique, so the original value cannot be recovered from the masked value. It uses a strategy of de-identifying data sets or masking personal identifiers such as name and social security number, and suppressing or generalizing quasi-identifiers like date of birth and ZIP code. Thus, data masking is one of the most popular approaches to live data anonymization. k-anonymity, first proposed by Sweeney and Samarati [29, 30], protects against identity disclosure but fails to protect against attribute disclosure. Truta et al. [31] have presented p-sensitive anonymity, which protects against both identity and attribute disclosure. Other anonymization methods fall into the classes of adding noise to the data, swapping cells within columns, and replacing groups of k records with k copies of a single representative. These methods share a common problem: the difficulty of anonymizing high-dimensional data sets [32, 33].

A significant benefit of this technique is that it reduces the cost of securing a big data deployment. As secure data is migrated from a secure source into the platform, masking reduces the need to apply additional security controls to that data while it resides in the platform.
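Two of the techniques mentioned above, masking direct identifiers and swapping cells within a column, can be sketched as follows. The records, the placeholder values and the choice of "age" as the swapped column are hypothetical and serve only to show the mechanics.

```python
import random


def mask_identifiers(rows: list[dict]) -> list[dict]:
    """Replace direct identifiers with fixed placeholders. The original values
    cannot be recovered from the output."""
    return [dict(row, name="*", ssn="XXX-XX-XXXX") for row in rows]


def swap_column(rows: list[dict], column: str, seed: int = 0) -> list[dict]:
    """Randomly permute one column across rows: the column's overall values are
    preserved, but each value is detached from the row it came from."""
    values = [row[column] for row in rows]
    random.Random(seed).shuffle(values)
    return [dict(row, **{column: value}) for row, value in zip(rows, values)]


# Fictitious patients; the SSNs are made-up placeholders.
patients = [
    {"name": "Amina", "ssn": "123-45-6789", "age": 51, "disease": "Asthma"},
    {"name": "Omar", "ssn": "987-65-4321", "age": 38, "disease": "Flu"},
    {"name": "Salma", "ssn": "555-12-3456", "age": 64, "disease": "Diabetes"},
]
released = swap_column(mask_identifiers(patients), "age", seed=42)
for row in released:
    print(row)
```

Swapping keeps column-level statistics such as the mean age intact but breaks the link between age and disease, which illustrates the utility cost that these anonymization methods trade for privacy.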

4) Access control: Once authenticated, users can enter an information system, but their access is still governed by an access control policy, which is typically based on the privileges and rights of each practitioner as authorized by the patient or a trusted third party. It is thus a powerful and flexible mechanism for granting permissions to users. It provides sophisticated authorization controls to ensure that users can perform only the activities for which they have permissions, such as data access, job submission, cluster administration, etc.

A number of solutions have been proposed to address security and access control concerns. Role-based access control (RBAC) [34] and attribute-based access control (ABAC) [35, 36] are the most popular models for EHRs. However, RBAC and ABAC have shown some limitations when used alone in medical systems. Paper [37] also proposes a cloud-oriented, storage-efficient dynamic access control scheme based on ciphertext-policy attribute-based encryption (CP-ABE) and a symmetric encryption algorithm (such as AES). To satisfy the requirements of fine-grained access control while preserving security and privacy, we propose adopting access control methods in conjunction with other security techniques, e.g. encryption.
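Combining the two models can be sketched as a role check (RBAC) followed by attribute conditions (ABAC). The roles, permissions and the two attribute rules below (same department, patient assigned to the practitioner) are hypothetical policy choices made for illustration; they are not taken from [34] to [37].

```python
from dataclasses import dataclass, field

# Hypothetical role -> permission mapping (the RBAC part).
ROLE_PERMISSIONS = {
    "physician": {"read_record", "write_record"},
    "nurse": {"read_record"},
    "billing": {"read_billing"},
}


@dataclass
class User:
    user_id: str
    role: str
    department: str
    # Patients this practitioner is authorized to treat (e.g. by patient consent).
    assigned_patients: set[str] = field(default_factory=set)


@dataclass
class Resource:
    patient_id: str
    department: str


def is_allowed(user: User, action: str, res: Resource) -> bool:
    """RBAC gate first, then ABAC conditions on user and resource attributes."""
    if action not in ROLE_PERMISSIONS.get(user.role, set()):
        return False
    same_department = user.department == res.department
    treating = res.patient_id in user.assigned_patients
    return same_department and treating


dr = User("u1", "physician", "cardiology", {"P001"})
nurse = User("u2", "nurse", "cardiology", {"P001"})
rec = Resource("P001", "cardiology")
print(is_allowed(dr, "write_record", rec))                            # True
print(is_allowed(nurse, "write_record", rec))                         # False: role lacks permission
print(is_allowed(dr, "read_record", Resource("P002", "cardiology")))  # False: not assigned
```

The role table keeps administration simple, while the attribute checks provide the finer granularity that roles alone cannot express.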

5) Monitoring and auditing: Security monitoring is the gathering and investigation of network events to catch intrusions. Auditing means recording user activities in the healthcare system in chronological order, such as maintaining a log of every access to and modification of data. These are two optional security metrics for measuring and ensuring the safety of a healthcare system [38].
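A chronological audit trail can be sketched as an append-only list of access records. The hash chaining below, in which each entry stores the hash of the previous one so that later edits to history become detectable, is an added assumption that goes beyond the plain log described in [38]; the user and resource names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only, chronologically ordered audit trail. Each entry stores the
    hash of the previous entry, so editing or deleting a past entry breaks the chain."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, user: str, action: str, resource: str) -> None:
        entry = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "resource": resource,
            "prev": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)

    def verify(self) -> bool:
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.record("dr_smith", "read", "EHR/P001")
log.record("dr_smith", "update", "EHR/P001")
print(log.verify())                # True
log.entries[0]["action"] = "none"  # simulate tampering with history
print(log.verify())                # False
```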

Intrusion detection and prevention over the whole network traffic is quite tricky. To address this problem, a security monitoring architecture has been developed that analyzes DNS traffic, IP flow records, HTTP traffic and honeypot data [39]. The suggested solution stores and processes data from distributed sources through data correlation schemes. At this stage, three likelihood metrics are calculated to identify whether a domain name, packet or flow is malicious. Depending on the resulting score, either an alert is raised by the detection system or the process is stopped by the prevention system. According to a performance analysis of open source big data platforms on a company's electronic payment activity data, Spark and Shark produce faster and steadier results than Hadoop, Hive and Pig [40].
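The score-to-action step can be sketched as below. How [39] actually computes and combines its three likelihood metrics is not described here, so the use of the maximum and both thresholds are hypothetical choices that a real deployment would calibrate on labelled traffic.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    ALERT = "alert"  # the detection system raises an alarm
    BLOCK = "block"  # the prevention system stops the traffic


# Hypothetical thresholds on a maliciousness likelihood in [0, 1].
ALERT_THRESHOLD = 0.5
BLOCK_THRESHOLD = 0.8


def decide(domain_score: float, packet_score: float, flow_score: float) -> Action:
    """Combine three likelihoods and map the result to an action. Taking the
    maximum is an assumption: any single strong indicator is treated as enough."""
    score = max(domain_score, packet_score, flow_score)
    if score >= BLOCK_THRESHOLD:
        return Action.BLOCK
    if score >= ALERT_THRESHOLD:
        return Action.ALERT
    return Action.ALLOW


print(decide(0.1, 0.2, 0.05).value)  # allow
print(decide(0.6, 0.1, 0.3).value)   # alert
print(decide(0.2, 0.9, 0.4).value)   # block
```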

Big data network security systems should detect abnormalities quickly and identify correct alerts from heterogeneous data. Therefore, a big data security event monitoring system model has been proposed, consisting of four modules: data collection, integration, analysis, and interpretation [41]. Data collection gathers security and network device logs and event information. Data integration is performed by filtering and classifying the data. In the data analysis module, correlations and association rules are determined to catch events. Finally, data interpretation provides visual and statistical outputs to a knowledge database that makes decisions, predicts network behavior and responds to events.
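The four modules can be sketched as a small pipeline over log events. The event format, the category table and the single correlation rule (repeated authentication failures plus reconnaissance from the same source) are hypothetical stand-ins for the richer analysis that [41] describes.

```python
from collections import Counter

# 1) Data collection: hypothetical events gathered from security/network device logs.
events = [
    {"src": "10.0.0.5", "device": "firewall", "type": "login_failed"},
    {"src": "10.0.0.5", "device": "vpn", "type": "login_failed"},
    {"src": "10.0.0.5", "device": "ids", "type": "port_scan"},
    {"src": "10.0.0.9", "device": "vpn", "type": "login_ok"},
    {"src": "10.0.0.5", "device": "firewall", "type": "login_failed"},
]

# Hypothetical classification of event types; anything not listed is treated as benign.
CATEGORIES = {"login_failed": "auth", "port_scan": "recon"}


def integrate(raw):
    """2) Integration: filter out benign events and classify the rest."""
    return [dict(e, category=CATEGORIES[e["type"]]) for e in raw if e["type"] in CATEGORIES]


def analyze(classified, min_failures=3):
    """3) Analysis: one correlation rule. Flag a source showing repeated
    authentication failures AND reconnaissance, possibly across devices."""
    per_source = {}
    for e in classified:
        per_source.setdefault(e["src"], Counter())[e["category"]] += 1
    return [src for src, c in per_source.items() if c["auth"] >= min_failures and c["recon"] >= 1]


def interpret(flagged, classified):
    """4) Interpretation: a statistical summary for analysts or a knowledge base."""
    return {
        "flagged_sources": flagged,
        "events_by_category": dict(Counter(e["category"] for e in classified)),
    }


classified = integrate(events)
report = interpret(analyze(classified), classified)
print(report)
# {'flagged_sources': ['10.0.0.5'], 'events_by_category': {'auth': 3, 'recon': 1}}
```

Correlating events across the firewall, VPN and IDS logs is what lets the rule fire: no single device sees both the repeated failures and the port scan.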

Privacy of big healthcare data

The invasion of patient privacy is considered a growing concern in big data analytics due to the emergence of advanced persistent threats and targeted attacks against information systems. As a result, organizations are challenged to address these different, complementary and critical issues. An incident reported in Forbes magazine raised an alarm over privacy [42]: Target Corporation sent baby care coupons to a teenage girl without her parents' knowledge. This incident compels analysts and developers to consider privacy in big data. They should be able to verify that their applications conform to privacy agreements and that sensitive data is kept private regardless of changes in applications and/or privacy regulations.

The privacy of medical data is therefore an important factor that must be seriously considered. In the next paragraph, we cite some of the laws on privacy protection worldwide.

Data protection laws

More than ever, it is crucial that healthcare organizations manage and safeguard personal data and address their risks and legal responsibilities in relation to processing personal data, given the growing thicket of applicable data protection legislation. Different countries have different policies and laws for data privacy. Data protection regulations and laws in some countries, along with their salient features, are listed in Table 2 below.

Privacy preserving methods in big data

A few traditional methods for preserving privacy in big data are briefly described here. Although these techniques have traditionally been used to ensure patient privacy [43,44,45], their shortcomings led to the advent of newer methods.

A. De-identification

De-identification is a traditional method of preventing the disclosure of confidential information by removing any information that can identify the patient, either through a first method that requires the removal of specific patient identifiers, or through a second, statistical method in which an expert verifies that enough identifiers have been removed. However, in big data an attacker may be able to draw on additional external information to re-identify patients. As a result, de-identification alone is not sufficient for protecting big data privacy. A more feasible route is to develop efficient privacy-preserving algorithms that help mitigate the risk of re-identification. The concepts of k-anonymity [46,47,48], l-diversity [47, 49, 50] and t-closeness [46, 50] have been introduced to enhance this traditional technique.

-

k-anonymity: In this technique, the higher the value of k, the lower the probability of re-identification. However, k-anonymization may distort the data and hence cause greater information loss. Furthermore, excessive anonymization can make the disclosed data less useful to its recipients, because some analyses become impossible or may produce biased and erroneous results. In k-anonymization, if the quasi-identifiers are linked with other publicly available data to identify individuals, then the sensitive attribute (like disease) will be revealed. Table 3 is a non-anonymized database consisting of the patient records of a fictitious hospital in Casablanca.

Table 3 A non-anonymized database comprising the patient records

There are six attributes along with five records in this data. There are two common techniques for achieving k-anonymity for some value of k.

The first one is suppression: in this method, an asterisk '*' can replace certain values of the attributes. All or some of the values of a column may be replaced by '*'. In the anonymized Table 4, each of the values in the 'Name' attribute and all the values in the 'Religion' attribute have been replaced by '*'.

The second method is generalization: in this method, individual values of attributes are replaced with a broader category. For instance, the Birth field has been generalized to the year (e.g. the value '21/11/1972' of the attribute 'Birth' may be replaced by the year '1972'). The ZIP Code field has also been generalized to indicate the wider area (Casablanca).

Table 4 has 2-anonymity with respect to the attributes 'Birth', 'Sex' and 'ZIP Code', since for any combination of these attributes found in any row of the table there are always at least two rows with exactly those attributes. In a dataset with k-anonymity, each quasi-identifier tuple occurs in at least k records. k-anonymous data can still be vulnerable to attacks such as the unsorted matching attack, the temporal attack, and the complementary release attack [50, 51]. Moreover, making relations of private records k-anonymous while withholding as little information as possible, so that each individual remains hidden in a group of at least k records, is an NP-hard optimization problem [52].

Various measures have been proposed to quantify the information loss caused by anonymization, but they do not reflect the actual usefulness of the data [53, 54]. Therefore, we move towards the l-diversity strategy of data anonymization.

-

l-diversity: It is a form of group-based anonymization used to safeguard privacy in data sets by reducing the granularity of data representation. This model (Distinct, Entropy, Recursive) [46, 47, 51] is an extension of the k-anonymity model, which uses methods including generalization and suppression to reduce the granularity of data representation in such a way that any given record maps onto at least k different records in the data. The l-diversity model handles some of the weaknesses of the k-anonymity model, in which protecting identities to the level of k individuals is not equivalent to protecting the corresponding sensitive values that were generalized or suppressed. The problem with this method is that it depends on the range of the sensitive attribute. If the data must be made l-diverse even though the sensitive attribute does not have enough distinct values, fictitious data has to be inserted. This fictitious data improves security but may cause problems in analysis. Moreover, the l-diversity method is subject to skewness and similarity attacks [51] and thus cannot prevent attribute disclosure.

-

t-closeness: It is a further improvement of l-diversity group-based anonymization. The t-closeness model (equal/hierarchical distance) [46, 50] extends the l-diversity model by treating the values of an attribute distinctly, taking into account the distribution of data values for that attribute. The main advantage of this technique is that it prevents attribute disclosure; its drawback is that as the size and variety of data increase, the odds of re-identification increase too.
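The three models can be checked on a small table: suppression and generalization produce the release, k is the size of the smallest equivalence class, distinct l is the fewest different sensitive values in any class, and t is the largest distance between a class's sensitive-value distribution and the whole table's. The records below are invented and do not reproduce Table 3; the ZIP-to-area lookup is hypothetical; and only the equal-distance variant of t-closeness is shown, for which the Earth Mover's Distance reduces to half the L1 distance between the two distributions.

```python
from collections import Counter, defaultdict

QUASI_IDENTIFIERS = ("birth", "sex", "zip")
SENSITIVE = "disease"

# Five fictitious records with six attributes (all values are invented).
records = [
    {"name": "Amina", "birth": "21/11/1972", "sex": "F", "zip": "20250", "religion": "R1", "disease": "Diabetes"},
    {"name": "Salma", "birth": "05/03/1972", "sex": "F", "zip": "20100", "religion": "R2", "disease": "Diabetes"},
    {"name": "Youssef", "birth": "14/07/1985", "sex": "M", "zip": "20330", "religion": "R1", "disease": "Flu"},
    {"name": "Omar", "birth": "30/01/1985", "sex": "M", "zip": "20400", "religion": "R3", "disease": "Cancer"},
    {"name": "Karim", "birth": "09/09/1985", "sex": "M", "zip": "20050", "religion": "R2", "disease": "Flu"},
]

# Hypothetical lookup from a ZIP prefix to the wider area it belongs to.
ZIP_AREA = {"20": "Casablanca"}


def anonymize(rec: dict) -> dict:
    """Suppression (name, religion -> '*') plus generalization
    (DD/MM/YYYY -> YYYY, ZIP -> area)."""
    return dict(rec, name="*", religion="*",
                birth=rec["birth"].split("/")[-1],
                zip=ZIP_AREA.get(rec["zip"][:2], "*"))


def equivalence_classes(table: list[dict]) -> dict:
    """Group the sensitive values by quasi-identifier tuple."""
    classes = defaultdict(list)
    for row in table:
        classes[tuple(row[a] for a in QUASI_IDENTIFIERS)].append(row[SENSITIVE])
    return classes


def k_anonymity(table: list[dict]) -> int:
    """k is the size of the smallest equivalence class."""
    return min(len(v) for v in equivalence_classes(table).values())


def distinct_l_diversity(table: list[dict]) -> int:
    """l is the smallest number of distinct sensitive values in any class."""
    return min(len(set(v)) for v in equivalence_classes(table).values())


def distribution(values: list) -> dict:
    n = len(values)
    return {v: c / n for v, c in Counter(values).items()}


def t_closeness_level(table: list[dict]) -> float:
    """Smallest t the table satisfies: the largest equal-distance EMD (half the
    L1 distance) between a class's sensitive distribution and the whole table's."""
    overall = distribution([row[SENSITIVE] for row in table])

    def emd(p: dict) -> float:
        support = set(p) | set(overall)
        return 0.5 * sum(abs(p.get(v, 0.0) - overall.get(v, 0.0)) for v in support)

    return max(emd(distribution(v)) for v in equivalence_classes(table).values())


released = [anonymize(r) for r in records]
print(k_anonymity(records), k_anonymity(released))  # 1 2
print(distinct_l_diversity(released))               # 1
print(round(t_closeness_level(released), 3))        # 0.6
```

The release is 2-anonymous yet only 1-diverse: everyone in the (1972, F, Casablanca) class has diabetes, so the disease is disclosed without identifying anyone, which is the homogeneity weakness l-diversity targets. The t value of 0.6 quantifies the same skew against the overall distribution.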

B. HybrEx

The hybrid execution (HybrEx) model [55] is a model for confidentiality and privacy in cloud computing. It uses public clouds only for an organization's non-sensitive data and computation classified as public, i.e., when the organization declares that there is no privacy or confidentiality risk in exporting the data and performing computation on it in public clouds. For an organization's sensitive, private data and computation, the model executes in its private cloud. Moreover, when an application requires access to both private and public data, the application itself is also partitioned and runs in both the private and public clouds. The model considers data sensitivity before a job's execution and provides integration with safety.
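The placement rule just described can be sketched as a routing step that runs before any job starts. Every name here (`Record`, `Placement`, `place`) is hypothetical, and the boolean sensitivity label stands in for whatever classification policy the organization actually uses. This is not HybrEx's own interface.

```python
from dataclasses import dataclass, field
from typing import Iterable, List


@dataclass
class Record:
    payload: str
    sensitive: bool  # hypothetical label assigned by the organization's policy


@dataclass
class Placement:
    public_cloud: List[Record] = field(default_factory=list)
    private_cloud: List[Record] = field(default_factory=list)

    @property
    def partitioned(self) -> bool:
        """True when the application must be split across both clouds."""
        return bool(self.public_cloud) and bool(self.private_cloud)


def place(records: Iterable[Record]) -> Placement:
    """Route each record by sensitivity before the job is executed."""
    placement = Placement()
    for record in records:
        target = placement.private_cloud if record.sensitive else placement.public_cloud
        target.append(record)
    return placement


p = place([Record("portal page views", False), Record("patient diagnosis", True)])
print(len(p.public_cloud), len(p.private_cloud), p.partitioned)  # 1 1 True
```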

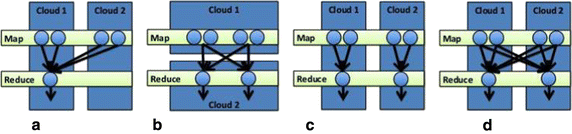

The four categories in which HybrEx MapReduce enables new kinds of applications that use both public and private clouds are shown in Fig. 2:

Fig. 2 The four execution categories for HybrEx MapReduce [62]: a Map hybrid, b Horizontal partitioning, c Vertical partitioning, d Hybrid.

1. Map hybrid (Fig. 2a). The map phase is executed in both the public and the private clouds, while the reduce phase is executed in only one of the clouds.

2. Vertical partitioning (Fig. 2c). Map and reduce tasks are executed in the public cloud using public data as the input; they shuffle intermediate data among themselves and store the result in the public cloud. The same work is done in the private cloud with private data. The jobs are processed in isolation.

3. Horizontal partitioning (Fig. 2b). The map phase is executed only in public clouds, while the reduce phase is executed in a private cloud.

4. Hybrid (Fig. 2d). The map phase and the reduce phase are executed on both public and private clouds. Data transmission among the clouds is also possible.
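To make the map hybrid category concrete, the sketch below simulates a word count in plain Python. Each "cloud" maps its own input split. The reduce runs only in the private cloud, so private records never leave it, and only public intermediate data crosses clouds. The in-process clouds, the function names, and the choice of the private cloud for the reduce are all assumptions for illustration. This is not the HybrEx implementation [55].

```python
from collections import Counter
from typing import Iterable, List


def map_phase(lines: Iterable[str]) -> Counter:
    """Map with local combining: word counts for one cloud's input split."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def reduce_phase(partials: List[Counter]) -> Counter:
    """Reduce: merge partial counts (runs in a single cloud in map hybrid)."""
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total


def map_hybrid(public_lines: Iterable[str], private_lines: Iterable[str]) -> Counter:
    public_out = map_phase(public_lines)    # executed in the public cloud
    private_out = map_phase(private_lines)  # executed in the private cloud
    # Assumption: the reduce runs in the private cloud, so only the public
    # intermediate output is shipped across the cloud boundary.
    return reduce_phase([public_out, private_out])


result = map_hybrid(["flu clinic hours"], ["flu patient record"])
print(result["flu"], result["patient"])  # 2 1
```

Horizontal partitioning would instead call `map_phase` only on public input and ship its output to the private reduce.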

The problem with HybrEx is that it does not deal with the key generated at the public and private clouds in the map phase, and that it treats only the cloud as an adversary [55].

C. Identity-based anonymization

Identity-based anonymization is a type of information sanitization whose intent is privacy protection. It is the process of either encrypting or removing personally identifiable information from data sets, so that the people whom the data describe remain anonymous. The main difficulty with this technique lies in combining anonymization, privacy protection, and big data techniques [56] to analyze usage data while protecting identities.

Intel's Human Factors Engineering team needed to protect Intel employees' privacy while using web page access logs and big data tools to improve the usability of Intel's heavily used internal web portal. They were required to remove personally identifying information (PII) from the portal's usage log repository, but in a way that did not hinder the use of big data tools for analysis or the ability to re-identify a log entry in order to investigate unusual behavior.
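One common way to meet both requirements (no PII in the analyzed logs, yet re-identification for investigations) is keyed pseudonymization. The sketch below illustrates that technique; it is not Intel's actual architecture [56]. Several details are assumptions:

- The field names `username` and `ip` are hypothetical.
- The secret key is a placeholder.
- The in-memory "vault" stands in for a separately stored, access-controlled mapping.

```python
import hashlib
import hmac


class Pseudonymizer:
    """Replace PII with keyed HMAC-SHA256 tokens; keep a vault for re-identification."""

    def __init__(self, key: bytes):
        self._key = key
        # Assumption: in practice this mapping lives outside the analytics
        # cluster and only authorized investigators can query it.
        self._vault = {}

    def pseudonymize(self, value: str) -> str:
        token = hmac.new(self._key, value.encode("utf-8"), hashlib.sha256).hexdigest()
        self._vault[token] = value
        return token

    def reidentify(self, token: str) -> str:
        return self._vault[token]


def anonymize_entry(entry: dict, p: Pseudonymizer, pii_fields=("username", "ip")) -> dict:
    """Return a copy of a log entry with its PII fields tokenized."""
    out = dict(entry)
    for name in pii_fields:
        if name in out:
            out[name] = p.pseudonymize(out[name])
    return out


p = Pseudonymizer(key=b"demo-key-keep-secret")
entry = anonymize_entry({"username": "alice", "ip": "10.0.0.7", "url": "/benefits"}, p)
print(entry["url"], p.reidentify(entry["username"]))  # /benefits alice
```

Because the token is deterministic for a given key, per-user analyses such as session counts still work on the anonymized logs.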

To realize the significant benefits of Cloud storage [57], Intel created an open architecture for anonymization [56] that allowed a variety of tools to be used for both de-identifying and re-identifying web log records. While implementing the architecture, they found that enterprise data has properties different from the standard examples in the anonymization literature [58]. Intel also found that, despite masking obvious personally identifiable information such as usernames and IP addresses, the anonymized data was vulnerable to correlation attacks. While exploring the tradeoffs of correcting these vulnerabilities, they found that User Agent information strongly correlates with individual users. This is a case study of anonymization in an enterprise, describing the requirements, implementation, and experiences encountered when using anonymization to protect privacy in enterprise data analyzed with big data techniques. The study assessed the quality of anonymization using k-anonymity based metrics. Intel used Hadoop to analyze the anonymized data and obtain valuable results for the Human Factors analysts [59, 60]. At the same time, it learned that anonymization needs to be more than just masking or generalizing certain fields: anonymized datasets need to be carefully analyzed to determine whether they are vulnerable to attack.
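A k-anonymity style check of the kind described above can flag the User Agent problem. For each value of a candidate quasi-identifier, count how many distinct (pseudonymous) users share it; any value shared by fewer than k users effectively singles people out. The function name, field names and log entries below are hypothetical. This is our illustration, not Intel's metric.

```python
from collections import defaultdict


def risky_values(entries, quasi_identifier: str, user_field: str, k: int) -> dict:
    """Map each quasi-identifier value shared by fewer than k distinct users to that count."""
    users_by_value = defaultdict(set)
    for e in entries:
        users_by_value[e[quasi_identifier]].add(e[user_field])
    return {v: len(users) for v, users in users_by_value.items() if len(users) < k}


# Hypothetical pseudonymized log entries.
logs = [
    {"user": "u1", "user_agent": "Firefox/115.0"},
    {"user": "u2", "user_agent": "Firefox/115.0"},
    {"user": "u1", "user_agent": "Firefox/115.0"},
    {"user": "u3", "user_agent": "CustomBrowser/0.9"},
]
print(risky_values(logs, "user_agent", "user", k=2))  # {'CustomBrowser/0.9': 1}
```

Masking usernames does not help here. Anyone who knows which employee runs the unusual browser can link every entry carrying that User Agent back to them.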

Summary of recent approaches used in big data privacy

In this paper, we have investigated the security and privacy challenges in big data by discussing existing approaches and techniques for achieving security and privacy from which healthcare organizations are likely to benefit greatly. In this section, we cite approaches and techniques presented in different papers, with emphasis on their focus and limitations (Table 5). Paper [61], for example, proposed privacy-preserving data mining techniques in Hadoop. Paper [67] introduced an efficient and privacy-preserving cosine similarity computing protocol, and paper [68] discussed how an existing approach, "differential privacy", is suitable for big data. Moreover, paper [69] suggested a scalable approach to anonymize large-scale data sets. Paper [70] examined various privacy issues arising in big data applications, while paper [71] proposed an algorithm to speed up the anonymization of big data streams. In addition, paper [72] suggested a novel framework to achieve privacy-preserving machine learning, and the methodology proposed in paper [73] provides data confidentiality and secure data sharing. All these techniques and approaches have shown some limitations.
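Since paper [68] concerns differential privacy, a minimal sketch of its best-known building block, the Laplace mechanism, may help. This is the textbook mechanism, not necessarily the approach of [68]. A counting query changes by at most 1 when one record is added or removed (sensitivity 1), so adding Laplace noise with scale 1/epsilon yields epsilon-differential privacy. The function names are hypothetical. The noise is drawn as the difference of two exponential variables, which is Laplace-distributed.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponential variables with mean `scale`
    # follows a Laplace distribution with location 0 and scale `scale`.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """epsilon-DP count: a counting query has sensitivity 1, so the scale is 1/epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


rng = random.Random(7)
ages = [34, 71, 52, 68, 45, 80]  # hypothetical patient ages
print(private_count(ages, lambda a: a >= 65, epsilon=0.5, rng=rng))  # true count 3 plus noise
```

Smaller epsilon means stronger privacy and noisier answers. Repeated queries consume privacy budget, which is one reason applying this to big data at scale is nontrivial.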

This increased complexity and these limitations make the new models more difficult to interpret, and their reliability harder to assess, than previous models.


Source: https://journalofbigdata.springeropen.com/articles/10.1186/s40537-017-0110-7

Posted by: guitierrezbessithomfor.blogspot.com
